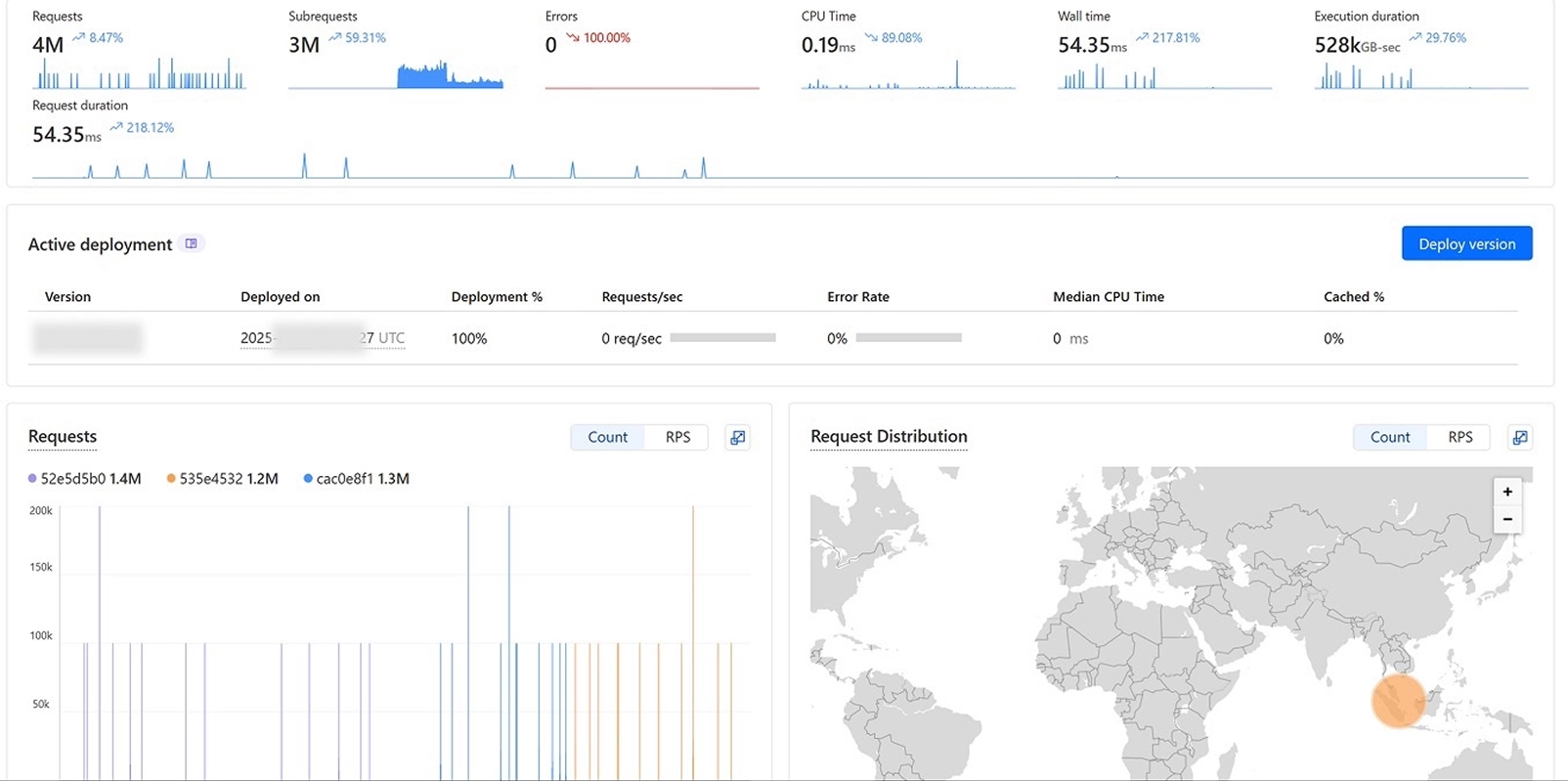

What if you could manage a fleet of over 300 trucks with real-time GPS tracking, all while keeping costs impressively low? When our team took on this challenge for an enterprise logistics client, we knew balancing performance with budget would require ingenuity. We had to rethink traditional architectures, push technical boundaries, and make strategic choices to build a robust system that processed over 4 million requests per month at an average latency of just 0.27 seconds—all for around $20/month.

In this post, I'll walk you through our journey: the challenges we faced, the creative solutions we implemented, and the lessons learned along the way. Whether you're a developer, a tech enthusiast, or a business leader, you'll find practical insights into building scalable, cost-efficient systems without compromising on performance.

📦 Background & Context: The Need for a Cost-Effective GPS Tracking System

Managing a large fleet requires precise, real-time data to optimize logistics, monitor vehicle health, and enhance operational efficiency. Traditional systems often rely on heavy cloud infrastructure, which can quickly escalate costs—especially when dealing with high data volumes and frequent requests.

Initially, we considered popular serverless offerings like AWS Lambda and DynamoDB. However, with 8 to 25 MB of GPS data per truck per booking cycle, the estimated expenses were daunting. We needed a solution that could handle massive data throughput while keeping our monthly budget between $15 and $20.

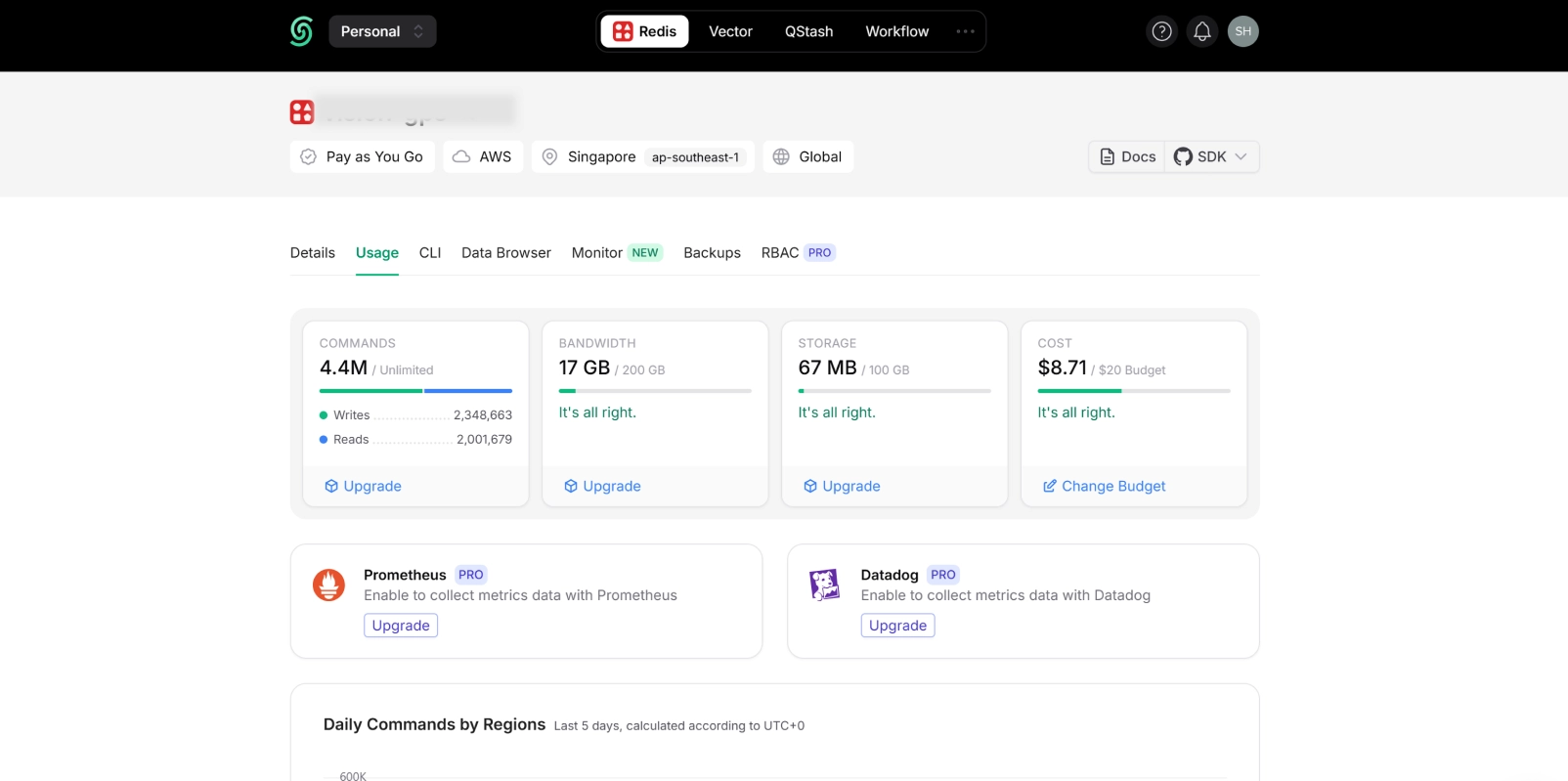

The real challenge arose with Upstash Redis. While its pay-as-you-go model was appealing, its 1 MB request size limit posed a hurdle. Given our requirement to process large data chunks, we needed to devise a strategy that would avoid unnecessary costs and maintain high performance.

🛠️ Main Content: Key Components of Our Live GPS Tracking Solution

1. Choosing Cloudflare Workers for Edge Computing

What We Did: We built our custom GPS tracking logic on Cloudflare Workers, utilizing edge computing to process requests quickly and affordably.

Why It Worked: Edge deployment reduced latency and significantly lowered data processing costs compared to traditional cloud solutions.

2. Implementing Upstash Redis for Real-Time Data Storage

What We Did: We selected Upstash Redis for its multi-region support and its Singapore node, which kept data access fast.

Key Challenge: The 1 MB per-command limit required creative data management strategies to avoid overspending.

3. Data Chunking & Metadata Strategy

Our Approach: We split large GPS data into manageable 800 KB chunks, using a structured key format like {truckID}:chunk:.

The Impact: Chunking let us send several small commands, each comfortably under the 1 MB limit, instead of a single oversized and costly command.
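To make the chunking idea concrete, here is a minimal sketch rather than our production code. The 800 KB chunk size comes from the approach above; the numeric index appended to the key, the `ChunkMeta` shape, and the function names are assumptions made for illustration.

```typescript
// Sketch only: the 800 KB chunk size comes from the article. The numeric
// index appended to each key and the metadata shape are assumptions.
const CHUNK_SIZE_BYTES = 800 * 1024;

interface Chunk {
  key: string;      // e.g. "TRUCK42:chunk:0" (index suffix is assumed)
  data: Uint8Array; // at most chunkSize bytes long
}

interface ChunkMeta {
  chunkCount: number;
  totalBytes: number;
}

// Split a payload into fixed-size chunks plus the metadata needed to rebuild it.
function splitIntoChunks(
  truckId: string,
  payload: Uint8Array,
  chunkSize: number = CHUNK_SIZE_BYTES,
): { chunks: Chunk[]; meta: ChunkMeta } {
  const chunks: Chunk[] = [];
  for (let offset = 0; offset < payload.length; offset += chunkSize) {
    chunks.push({
      key: `${truckId}:chunk:${chunks.length}`,
      data: payload.subarray(offset, offset + chunkSize),
    });
  }
  return {
    chunks,
    meta: { chunkCount: chunks.length, totalBytes: payload.length },
  };
}

// Reassemble the original payload from its chunks, in index order.
function joinChunks(chunks: Chunk[], meta: ChunkMeta): Uint8Array {
  const out = new Uint8Array(meta.totalBytes);
  let offset = 0;
  for (const chunk of chunks.slice(0, meta.chunkCount)) {
    out.set(chunk.data, offset);
    offset += chunk.data.length;
  }
  return out;
}

// Usage: a 2 MiB payload is split into chunks of at most 800 KB each.
const example = splitIntoChunks("TRUCK42", new Uint8Array(2 * 1024 * 1024));
console.log(example.chunks.map((c) => c.key), example.meta);
```

If the chunks are encoded before they are sent (for example as text), measure the encoded size rather than the raw byte count, since that is the size the request limit applies to.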

4. Reducing Redis Command Overhead with Lua Scripting

How It Helped: By combining multiple operations into a single Lua script, we reduced the number of Redis commands by 50–60%, keeping costs low.
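As a rough illustration of the idea, the sketch below folds two writes (a chunk and its metadata) into one `EVAL` call. The script body, key names, and hash fields are hypothetical and not our exact script; only the `EVAL script numkeys key [key ...] arg [arg ...]` argument layout is standard Redis.

```typescript
// Hypothetical script: store one chunk and update the truck's metadata hash
// in a single round trip. Key names and hash fields are illustrative only.
const STORE_CHUNK_SCRIPT = `
redis.call('SET', KEYS[1], ARGV[1])
redis.call('HSET', KEYS[2], 'chunks', ARGV[2], 'updated', ARGV[3])
return 1
`;

// Build the argument list for a Redis EVAL call.
// Redis syntax: EVAL script numkeys key [key ...] arg [arg ...]
function buildEvalCommand(
  script: string,
  keys: string[],
  args: string[],
): string[] {
  return ["EVAL", script, String(keys.length), ...keys, ...args];
}

// Usage: two separate writes (SET + HSET) travel as one command.
const command = buildEvalCommand(
  STORE_CHUNK_SCRIPT,
  ["TRUCK42:chunk:0", "m:TRUCK42"],
  ["<chunk bytes>", "1", "1700000000"],
);
console.log(command.length, command[0], command[2]);
```

The resulting array can be passed to whichever Redis client you use. How scripted calls are metered depends on the provider, so check its pricing documentation before counting on a specific saving.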

5. Optimizing Data Storage Techniques

Key Techniques: We shortened keys (e.g., m:<plate_number>), used Redis hashes instead of JSON strings, and cached metadata locally to avoid unnecessary reads.
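Here is a small sketch of how these three techniques could fit together. The `m:<plate_number>` key format is the one mentioned above; the `TruckMeta` fields, the single-letter hash field names, and the `MetaCache` class are assumptions for illustration.

```typescript
// Assumed metadata shape; the real fields are not specified in the article.
interface TruckMeta {
  chunkCount: number;
  updatedAt: number;
}

// Short key in the m:<plate_number> format.
function metaKey(plateNumber: string): string {
  return `m:${plateNumber}`;
}

// HSET key field value [field value ...]: storing fields in a hash lets a
// single field be read or updated without rewriting a whole JSON string.
// The short field names "c" and "u" are hypothetical.
function toHsetCommand(plateNumber: string, meta: TruckMeta): string[] {
  return [
    "HSET",
    metaKey(plateNumber),
    "c", String(meta.chunkCount),
    "u", String(meta.updatedAt),
  ];
}

// Minimal in-memory cache with a time-to-live, used to skip repeat reads.
class MetaCache {
  private entries = new Map<string, { value: TruckMeta; expiresAt: number }>();
  private ttlMs: number;

  constructor(ttlMs: number) {
    this.ttlMs = ttlMs;
  }

  get(key: string, now: number = Date.now()): TruckMeta | undefined {
    const entry = this.entries.get(key);
    if (!entry || entry.expiresAt <= now) {
      this.entries.delete(key); // drop missing or expired entries
      return undefined;
    }
    return entry.value;
  }

  set(key: string, value: TruckMeta, now: number = Date.now()): void {
    this.entries.set(key, { value, expiresAt: now + this.ttlMs });
  }
}

// Usage: cache metadata for 5 seconds before going back to Redis.
const cache = new MetaCache(5_000);
cache.set(metaKey("ABC123"), { chunkCount: 3, updatedAt: 1700000000 });
console.log(cache.get(metaKey("ABC123")), toHsetCommand("ABC123", { chunkCount: 3, updatedAt: 1700000000 }));
```

In an edge runtime, in-memory state like this is best effort: it lives only as long as the instance that holds it, so treat a cache miss as normal and fall back to Redis.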

🔍 Step-by-Step Guide: How to Build a Cost-Effective GPS Tracking System

- Analyze Data Requirements: Calculate the average size of GPS data and expected request volume.

- Choose Scalable Tools: Evaluate edge computing options like Cloudflare Workers for low-latency processing.

- Design Efficient Data Flows: Implement chunking strategies to handle large datasets within request limits.

- Optimize Command Execution: Use Lua scripting to reduce Redis command usage.

- Monitor & Iterate: Regularly check metrics and fine-tune for performance and cost savings.
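For the first step, a quick back-of-the-envelope calculation goes a long way. The sketch below uses the figures from this post (8 to 25 MB per truck per booking cycle, 800 KB chunks, 4 million requests per month); the function names and the 30-day month are assumptions.

```typescript
const KB = 1024;
const MB = 1024 * KB;

// Number of chunks needed to store a payload of the given size.
function chunksNeeded(
  payloadBytes: number,
  chunkBytes: number = 800 * KB,
): number {
  return Math.ceil(payloadBytes / chunkBytes);
}

// Average request rate implied by a monthly request count.
// Assumes a 30-day month unless told otherwise.
function averageRequestsPerSecond(
  requestsPerMonth: number,
  daysPerMonth: number = 30,
): number {
  return requestsPerMonth / (daysPerMonth * 24 * 60 * 60);
}

const minChunks = chunksNeeded(8 * MB);  // 11 chunks
const maxChunks = chunksNeeded(25 * MB); // 32 chunks
const avgRps = averageRequestsPerSecond(4_000_000); // about 1.54 requests/second

console.log({ minChunks, maxChunks, avgRps: avgRps.toFixed(2) });
```

Averages hide peaks, so it is worth running the same numbers against your busiest hour before settling on a pricing tier.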

Pro Tip: Implement local caching wherever possible to minimize external data fetches and reduce costs further.

📈 Benefits & Practical Applications: Real-World Impact of Our Solution

- Massive Cost Savings: Handled 4M+ requests/month for around $20/month.

- Fast & Reliable: Maintained an average response time of 0.27 seconds, enhancing user experience.

- Scalability: The architecture allowed dynamic scaling with fluctuating request volumes without performance dips.

By optimizing our approach, we turned what could have been a costly project into a model of cost-efficiency and performance. This methodology can be adapted to any high-frequency data system, from logistics to real-time analytics platforms.

❓ Common Questions & Misconceptions

Q: Can serverless systems really handle enterprise-grade workloads?

A: Absolutely! The key is to combine edge computing with smart data management strategies, as demonstrated in this project.

Q: How do you manage costs when scaling up?

A: Focus on atomic operations, optimize data structures, and choose pay-as-you-go models where possible.

Q: What if my data sizes exceed storage limits?

A: Implement chunking and use metadata to manage and retrieve large datasets efficiently.

💡 Conclusion: Mastering Cost-Efficiency in High-Performance Systems

Building a scalable and cost-effective live GPS tracking system for 300+ trucks was a challenging yet rewarding journey. By combining Cloudflare Workers with Upstash Redis, we achieved remarkable performance at minimal cost. The key takeaway? Creative problem-solving and a focus on simplicity often lead to the best outcomes.

If you're working on a real-time data system or need to manage high-frequency requests on a budget, I hope our experience provides valuable insights. There's immense potential in rethinking traditional architectures and exploring new tools that offer both scalability and affordability.

Let's Build Cost-Effective Solutions Together!

Are you facing challenges with scaling and budgeting in your tech projects? Share your thoughts, ask questions, or collaborate on creating innovative, cost-effective solutions.

Jackson Kasi

Technical Lead

Self-taught tech enthusiast with a passion for continuous learning and innovative solutions.